Dispatches from the Future: GPT

Embedding with the leading ML model

OpenAI recently released ChatGPT. I’ve already played around with GPT-3 in my spare time and at work, but I was still impressed with how they adapted the model to chat. Being able to reference earlier creations saves a lot of time when you’re working iteratively, which is almost always the case with LLMs.

I spent about two days fully addicted to ChatGPT, basically until I ran out of funny ideas. The first day I stayed up until 2 AM (normally I’m asleep by 11 PM) and the second day I spent about five hours straight, lost to the world around me. My wife half-jokingly warned me about the plot of Her.

I tweeted out my best results. They’re pretty funny to me still, even after multiple readings. That’s rare air in my book.

So, after my professional experience with plain GPT-3 and my personal experience with ChatGPT, here’s what I learned.

It’s great when there’s more than one right answer

GPT does an amazing job generating content. The median quality is decent and the speed is unparalleled, so the quality per unit of time is just off the charts. I would be seriously concerned if my job were primarily creative (in the art sense, not in the “think outside the box” sense).

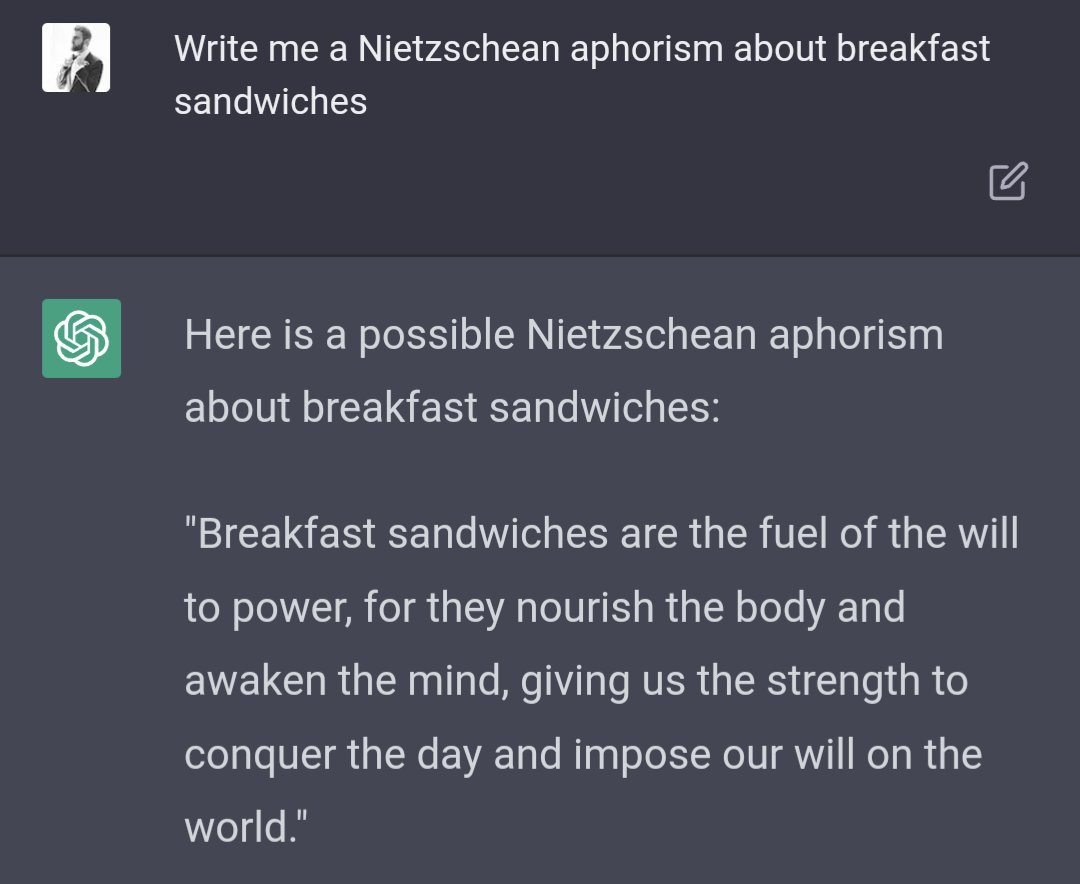

The examples I tweeted out were often the first response I got from ChatGPT, no rerolling or prompt-tweaking required. Sometimes I would reroll or tweak after getting something good and taking the screenshot, and I would get something else that was good yet substantially different. There are lots of ways to be funny, it turns out.

For other types of white collar work, I’m only mildly concerned about human employment, at least in the immediate future. Sure, there are plenty of screenshots showing ChatGPT doing programming or spreadsheets or whatever, but it simply does not have the ability to take in enough context to do more than boilerplate.

For example, one thought I had for applying GPT at work was automating SQL queries. GPT can certainly write SQL. But can we teach it what all the fields in a table mean? What about across multiple tables? I think the answer is no. One day I’m sure it will be yes though.
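To make the context problem concrete, here is a minimal sketch of how one might try to hand field meanings to the model: by pasting them into the prompt. The table names, column names, and the `build_sql_prompt` helper are all made up for illustration, and nothing here calls a model. It only shows that the prompt grows with every table and column you have to explain.

```python
# Hypothetical schema notes: these tables and columns are invented for illustration.
SCHEMA_NOTES = {
    "orders": {
        "id": "unique order identifier",
        "customer_id": "references customers.id",
        "total_cents": "order total in cents, tax included",
    },
    "customers": {
        "id": "unique customer identifier",
        "signup_date": "date the account was created (UTC)",
    },
}


def build_sql_prompt(question: str, schema_notes: dict[str, dict[str, str]]) -> str:
    """Assemble a plain-text prompt that spells out what every column means."""
    lines = ["Write a SQL query for the question below.", "", "Tables:"]
    for table, columns in schema_notes.items():
        lines.append(f"- {table}")
        for column, meaning in columns.items():
            lines.append(f"    {column}: {meaning}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_sql_prompt("What was total revenue per customer last month?", SCHEMA_NOTES)
print(prompt)
print(len(prompt), "characters")
```

Two small tables already take a dozen lines. A real warehouse with hundreds of columns, plus the unwritten rules about how they relate, is where I would expect this approach to run out of room.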

Remember, GPT trains on text from all across the web. It’s strong when the text you want looks like that! But when you want something specific to your context and it has to be objectively right, you’re gonna have a harder time. GPT is not AGI.

It still has trouble following rules

Here are two prompts ChatGPT got wrong over and over again:

“Write the longest palindromic sentence you can. Here is an example: ‘A man, a plan, a canal: Panama.’”

“Write a poem made of haikus.”

In both cases, there is an objective (and easily calculable!) rule GPT must follow to get the response right. To its credit, when I told ChatGPT its answers were wrong, it did identify its errors. To its discredit, it made the same errors in subsequent attempts. Even with the chat features like memory of previous responses and RLHF, it still flubbed.
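To show how easily calculable the palindrome rule is, here is a minimal Python sketch. The `is_palindrome` name is my own, and I am assuming the usual convention that spaces, punctuation, and capitalization don't count. A haiku check would also need a syllable counter, which is not shown here.

```python
def is_palindrome(sentence: str) -> bool:
    """Return True if the sentence reads the same in both directions,
    ignoring case and anything that is not a letter or digit."""
    letters = [ch.lower() for ch in sentence if ch.isalnum()]
    return letters == letters[::-1]


print(is_palindrome("A man, a plan, a canal: Panama."))  # True
print(is_palindrome("Write a poem made of haikus."))     # False
```

A check like this takes a fraction of a second, so any palindrome the model proposes can be verified before you believe it.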

The rules issue relates to my point above: creative work has fewer rules than analytical work, so GPT is better at creative work.

I would be concerned about using GPT for anything where errors can be subtle. I encountered one or two examples in my line of questioning about philosophers where ChatGPT made plausible but incorrect claims. I can only imagine relying on GPT to write code, having it introduce a subtle bug, then spending more time bug-hunting than I would have spent writing the code myself.

It can make impressive connections

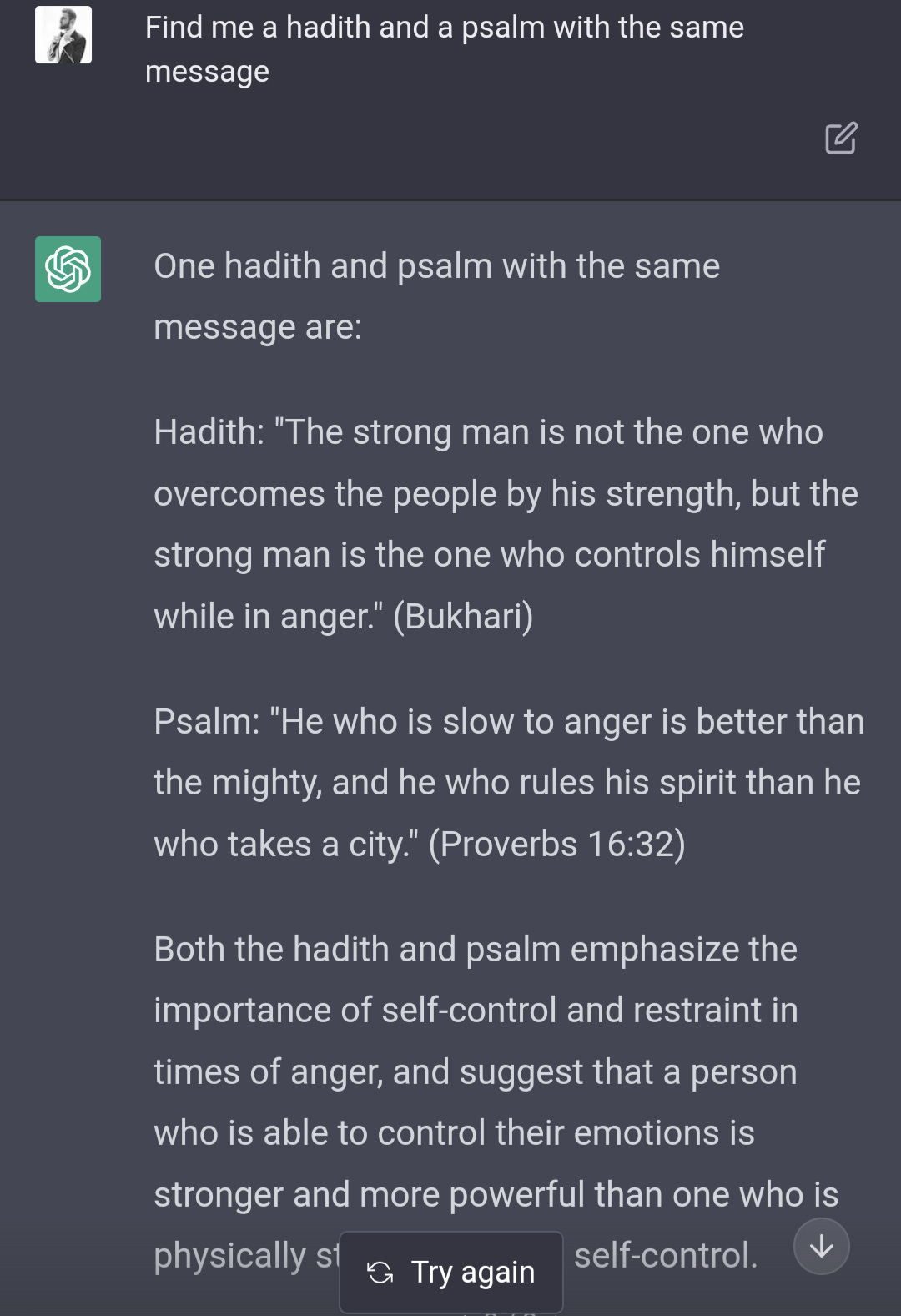

In my lines of questioning about philosophy and religion, I threw ChatGPT some questions I’m sure would be difficult even for a subject matter expert.

Example:

Maybe I could replicate that result by searching hadiths and psalms for the same value-related words (e.g. mercy, wisdom), but I was still impressed.

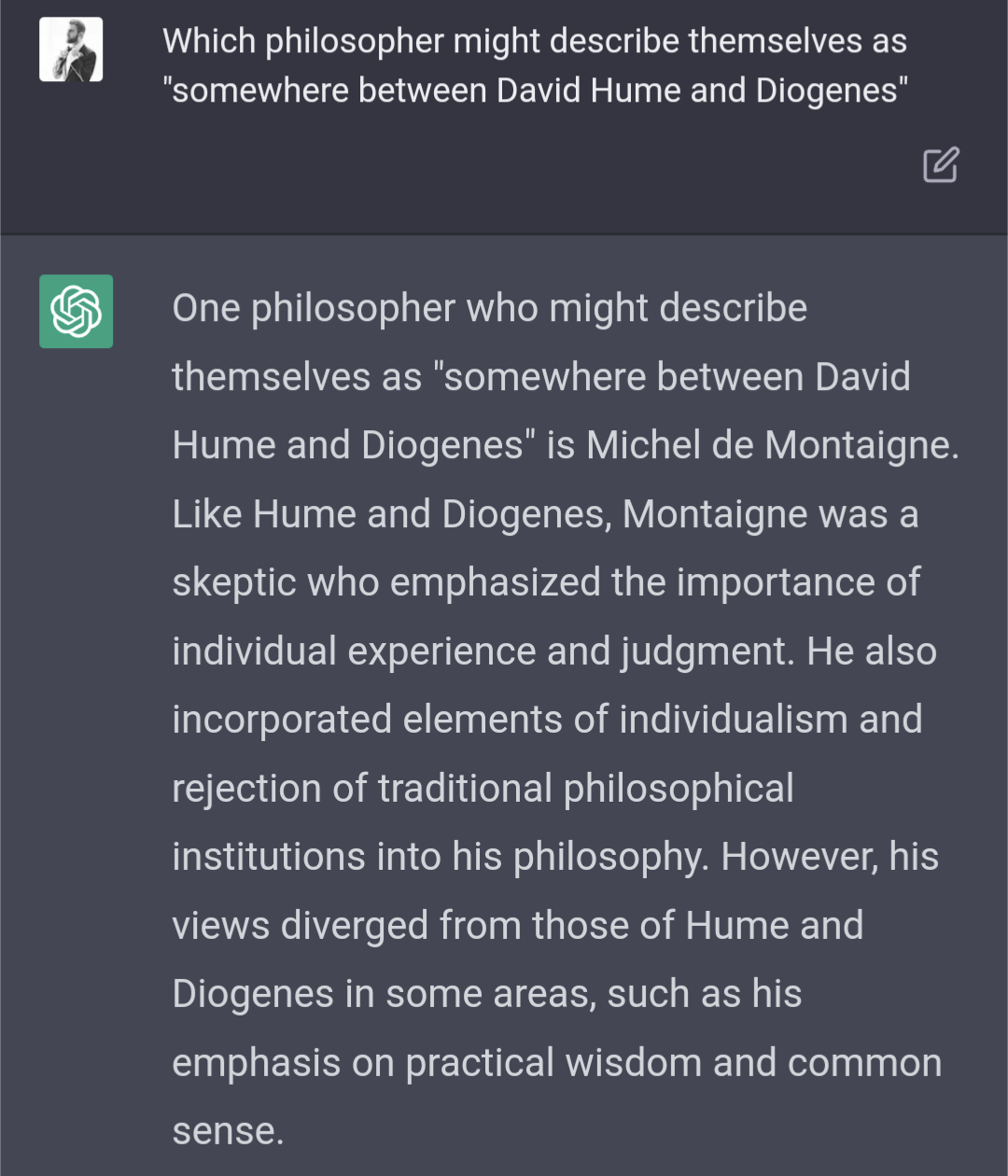

Here’s another:

Again, I can kind of envision a method to replicate this (search Wikipedia articles for shared terms like “skepticism” or “induction”), but ChatGPT does it instantly, like a subject matter expert would.
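The search-based method I have in mind could look roughly like this. It is only a sketch under my own assumptions: the `shared_terms` helper is hypothetical, the two passages are invented placeholders rather than real source texts, and the vocabulary is a hand-picked list of words to look for.

```python
import re


def shared_terms(text_a: str, text_b: str, vocabulary: set[str]) -> set[str]:
    """Return the vocabulary words that appear in both texts (case-insensitive)."""
    words_a = set(re.findall(r"[a-z]+", text_a.lower()))
    words_b = set(re.findall(r"[a-z]+", text_b.lower()))
    return vocabulary & words_a & words_b


# Placeholder passages written for this example, not quotes from any real article.
passage_a = "The first thinker defends skepticism about induction and causation."
passage_b = "The second thinker answers that skepticism by rethinking causation."
terms = {"skepticism", "induction", "causation", "mercy", "wisdom"}

print(sorted(shared_terms(passage_a, passage_b, terms)))  # ['causation', 'skepticism']
```

Even if this works, it only finds overlapping words. It doesn't explain why the overlap matters, which is the part ChatGPT did for me.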

Hardly an original thought, but I struggle to imagine the future of homework in subjects like English.

It won’t reveal the secrets of the universe

A few times I had the impulse to ask ChatGPT a heady question like “What is the meaning of life?” Of course, that’s a trick question, but even if it weren’t, I knew before I asked that I wouldn’t get a satisfying response.

There are two reasons asking such questions is fruitless. One, the model can only reflect its training data, the writings of humans, and humans have no consensus on these questions. Two, OpenAI doesn’t want the model causing (extra) controversy.

The second reason is really too bad. I asked tons of questions like the example below to get a better understanding of what different philosophers and world religions believe, but ChatGPT won’t play along.

OpenAI seems to be updating their rules quickly to close workarounds. I previously had success with prompts like “Provide a Straussian reading of Girard,” but now it refuses.

It’s here to stay

The range and flexibility on display with GPT, especially this latest incarnation, are proof positive that ML’s time has come for the average person.

I recommend everyone play around with ChatGPT, especially while it’s still free. Try giving it a simple task (or a not-so-simple one). Have it write you a paragraph. Use it as a sounding board.

Even with the intense time I spent experimenting, I’m sure I did not discover all its capabilities. I know I underused the chat/memory feature in particular.

Twitter is still the reigning champ for following ML news. Just search ChatGPT and follow any big accounts posting screenshots of their own work or retweets of others’.